Daniel Kofler

AutoCompyute

Lightweight Autograd Engine in Python

AutoCompyute is a deep learning library that provides automatic differentiation using only NumPy as the backend for computation (CuPy can be used as a drop-in replacement for NumPy). It is designed for simplicity and performance, and it enables you to train deep learning models with minimal dependencies while leveraging GPU acceleration. The package supports:

- Tensor operations with gradient tracking.

- Neural network layers and loss functions.

- Performant computation for both CPU and GPU.

- A focus on clarity and simplicity.
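A common way to treat CuPy as a drop-in replacement is to bind a single array-module name to whichever backend is available. The sketch below assumes this pattern. The name `xp` and the fallback logic are illustrative and not necessarily how AutoCompyute selects its backend.

```python
# Hypothetical backend selection (not AutoCompyute's actual mechanism):
# prefer CuPy when it is installed and a CUDA device is usable, else NumPy.
try:
    import cupy as xp
    xp.cuda.runtime.getDeviceCount()  # raises if no CUDA driver/device is present
except Exception:
    import numpy as xp

# Code written against `xp` runs unchanged on either backend,
# because CuPy mirrors the NumPy array API for these calls.
a = xp.arange(6, dtype=xp.float32).reshape(2, 3)
b = xp.ones((3, 2), dtype=xp.float32)
c = a @ b  # matrix product on CPU (NumPy) or GPU (CuPy)
print(type(c).__module__, c.shape)
```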

Under the Hood

At its core, AutoCompyute features a Tensor object for storing data and gradients, and Op objects for defining differentiable operations.

The Tensor object is the fundamental data structure in this autograd engine. It holds numerical data as a NumPy array and remembers how it was created by keeping a reference to the Op that created it. Its most important attributes are:

- data: A NumPy array containing the numerical values.

- grad: A NumPy array holding the computed gradients (initialized as None, filled by calling Tensor.backward()).

- ctx: Stores a reference to the operation (Op) that created this tensor.

- src: References the parent tensors involved in the creation of the tensor.
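To make these four attributes concrete, here is a minimal sketch of a Tensor class that carries them. It is an illustration that assumes float64 NumPy storage, not AutoCompyute's actual source. The real class has more methods and options.

```python
from typing import Optional

import numpy as np


class Tensor:
    """Hypothetical sketch of a graph node holding data, gradient, and provenance."""

    def __init__(self, data, ctx=None, src: tuple = ()):
        self.data = np.asarray(data, dtype=np.float64)  # numerical values
        self.grad: Optional[np.ndarray] = None  # stays None until backward() fills it
        self.ctx = ctx  # Op that produced this tensor; None for leaf tensors
        self.src = src  # parent tensors that the Op consumed

    def __repr__(self) -> str:
        return f"Tensor(data={self.data.tolist()}, grad={self.grad})"


# A leaf tensor is created directly from data, so it has no ctx and no parents.
w = Tensor([[1.0, 2.0], [3.0, 4.0]])
print(w)  # -> Tensor(data=[[1.0, 2.0], [3.0, 4.0]], grad=None)
```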

The Op object represents a differentiable operation applied to tensors. Each operation implements both a forward and a backward pass.
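The forward/backward contract can be sketched for elementwise multiplication. The class names `Op` and `Multiply` and their method signatures are assumptions made for illustration. The sketch shows why an Op caches values during the forward pass: each partial derivative of a product depends on the other input.

```python
import numpy as np


class Op:
    """Hypothetical base class: subclasses implement forward and backward."""

    def forward(self, *args: np.ndarray) -> np.ndarray:
        raise NotImplementedError

    def backward(self, dy: np.ndarray) -> tuple:
        raise NotImplementedError


class Multiply(Op):
    """Elementwise product y = x1 * x2 (assumes equal shapes, no broadcasting)."""

    def forward(self, x1, x2):
        # Cache both inputs; backward needs them to apply the chain rule.
        self.x1, self.x2 = x1, x2
        return x1 * x2

    def backward(self, dy):
        # dL/dx1 = dL/dy * x2 and dL/dx2 = dL/dy * x1
        return dy * self.x2, dy * self.x1


op = Multiply()
y = op.forward(np.array([1.0, 2.0]), np.array([3.0, 4.0]))
dx1, dx2 = op.backward(np.ones_like(y))
print(y, dx1, dx2)  # -> [3. 8.] [3. 4.] [1. 2.]
```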

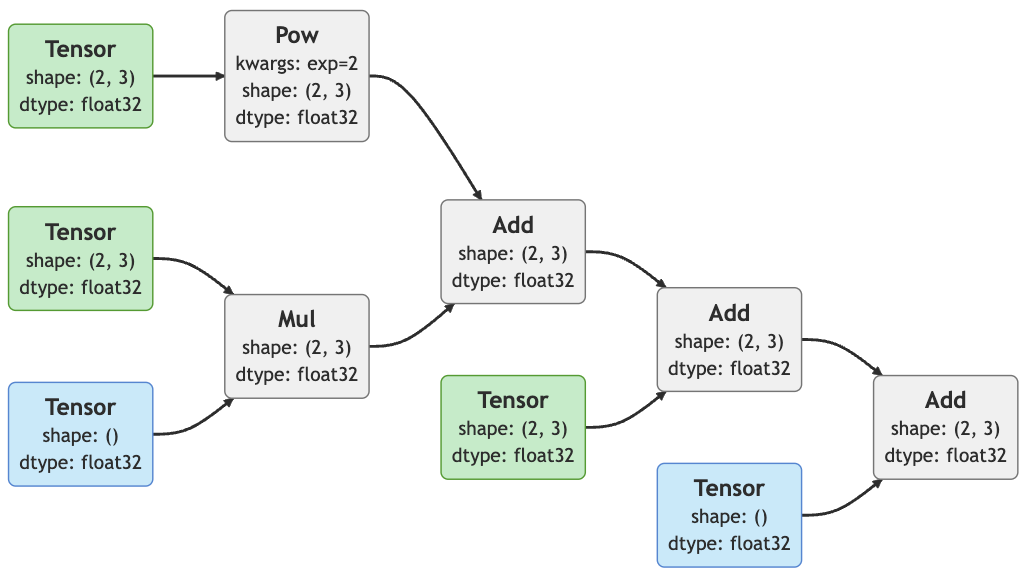

How the Engine Works

- Computation Graph Construction: When an operation (e.g., Tensor.add) is called, an Op instance is created. It performs the forward computation while also caching intermediate values that are required for the backward pass. The resulting output tensor maintains references to the Op and its parent tensors, forming a computational graph.

- Backpropagation: Calling backward() on the final tensor (e.g., a loss value) initiates gradient computation. The gradients propagate in reverse through the computational graph by calling backward() on each Op, which distributes gradients to the parent tensors.

- Gradient Storage: As the gradients are propagated, they are stored in the grad attribute of each Tensor, enabling later parameter updates for optimization.

Computational graph construction in AutoCompyute

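The three steps of graph construction, backpropagation, and gradient storage can be combined into one compact, self-contained sketch. Every name here is a hypothetical illustration of the technique, not AutoCompyute's source. This includes the `_apply` helper, the `Add`, `Mul`, and `Sum` ops, and the recursive topological sort in `backward`. The sketch also assumes that inputs have equal shapes and does no broadcasting.

```python
import numpy as np


class Op:
    """Hypothetical base class; backward returns one gradient per parent."""
    def forward(self, *xs): raise NotImplementedError
    def backward(self, dy): raise NotImplementedError


class Add(Op):
    def forward(self, x1, x2):
        return x1 + x2
    def backward(self, dy):
        return dy, dy  # the derivative of a sum w.r.t. each input is 1


class Mul(Op):
    def forward(self, x1, x2):
        self.x1, self.x2 = x1, x2  # cached for the backward pass
        return x1 * x2
    def backward(self, dy):
        return dy * self.x2, dy * self.x1


class Sum(Op):
    def forward(self, x):
        self.shape = x.shape  # cached so the gradient can be expanded back
        return np.sum(x)
    def backward(self, dy):
        return (np.ones(self.shape) * dy,)


class Tensor:
    def __init__(self, data, ctx=None, src=()):
        self.data = np.asarray(data, dtype=np.float64)
        self.grad = None
        self.ctx = ctx  # Op that created this tensor
        self.src = src  # parent tensors

    def _apply(self, op, *others):
        # Graph construction: run the forward pass, then record op and parents.
        parents = (self, *others)
        out = op.forward(*(p.data for p in parents))
        return Tensor(out, ctx=op, src=parents)

    def add(self, other): return self._apply(Add(), other)
    def mul(self, other): return self._apply(Mul(), other)
    def sum(self): return self._apply(Sum())

    def backward(self):
        # Order nodes so every tensor comes after all of its parents.
        order, seen = [], set()
        def visit(t):
            if id(t) not in seen:
                seen.add(id(t))
                for p in t.src:
                    visit(p)
                order.append(t)
        visit(self)
        self.grad = np.ones_like(self.data)  # d(out)/d(out) = 1
        # Backpropagation: walk the graph in reverse, consumers before producers.
        for t in reversed(order):
            if t.ctx is None:
                continue  # leaf tensor: nothing further to propagate
            for p, g in zip(t.src, t.ctx.backward(t.grad)):
                # Gradient storage: accumulate, since a tensor may be used twice.
                p.grad = g if p.grad is None else p.grad + g


x = Tensor([1.0, 2.0, 3.0])
w = Tensor([4.0, 5.0, 6.0])
loss = x.mul(w).add(x).sum()  # loss = sum(x * w + x)
loss.backward()
print(loss.data, x.grad, w.grad)  # -> 38.0 [5. 6. 7.] [1. 2. 3.]
```

Because `x` feeds into both the multiplication and the addition, its gradient is the sum of two contributions, `w + 1`. This is why the sketch accumulates gradients with `+` rather than overwriting them. A more robust engine would typically use an iterative traversal to avoid Python's recursion limit on very deep graphs.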